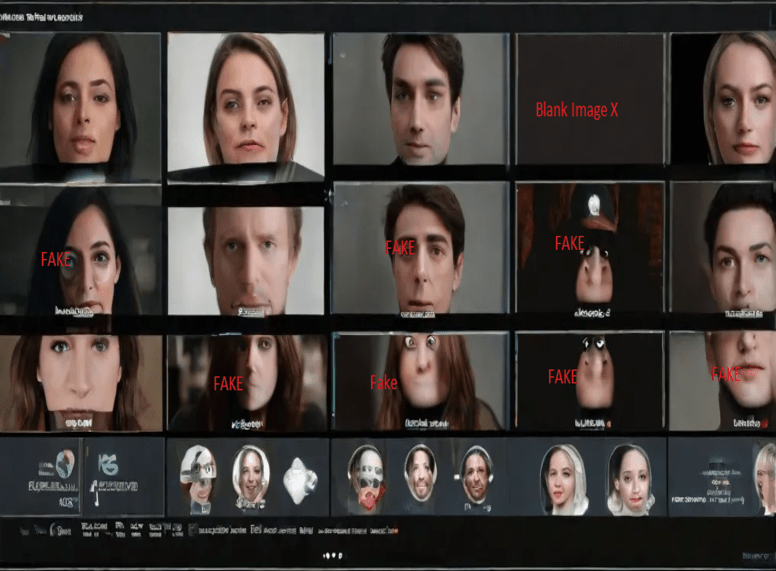

In today’s digital landscape, the rise of deepfakes, highly realistic but fake video and audio, poses a significant threat to media integrity. Manipulated media of this kind can spread misinformation, influence public opinion, and harm reputations. To combat this, AI tools are being developed to detect manipulation and verify the authenticity of media content. This article explores recent AI tools designed to identify deepfakes and maintain the trustworthiness of media.

The Threat of Deepfakes

Deepfakes use machine-learning models, typically deep neural networks, to synthesize convincing fake media that is often indistinguishable from authentic footage to the human eye. Such media can be used maliciously, undermining trust in digital content. Addressing this challenge is crucial for ensuring that the information we consume is accurate and reliable.

Cutting-Edge AI Algorithms

AI algorithms play a pivotal role in detecting deepfakes. These algorithms analyze video and audio recordings for signs of manipulation, identifying inconsistencies that humans might miss. For instance, a deepfake video may show a person speaking, but subtle discrepancies in lip movements and voice synchronization can reveal the deception.
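To make the lip-sync idea concrete, here is a minimal sketch in Python. It assumes two inputs that are not described in this article and would have to come from other tools: a per-frame measure of how open the speaker's mouth is (for example, from a face landmark tracker) and a per-frame measure of audio loudness. The function names and the 0.5 threshold are hypothetical choices for illustration, not part of any specific detector.

```python
from statistics import mean, pstdev


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 samples")
    mx, my = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return 0.0
    cov = mean((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / (sx * sy)


def lip_sync_score(mouth_opening, audio_energy):
    """Higher values mean the mouth moves in step with the sound."""
    return pearson(mouth_opening, audio_energy)


def looks_out_of_sync(mouth_opening, audio_energy, threshold=0.5):
    """Flag a clip when mouth motion and loudness are weakly correlated.

    The threshold is an arbitrary illustrative value (an assumption).
    """
    return lip_sync_score(mouth_opening, audio_energy) < threshold
```

A real detector would use far richer features than a single correlation, but the sketch shows the underlying check: genuine speech tends to have mouth motion that rises and falls with the sound, and a weak relationship between the two is a reason for closer inspection.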

DeepTrace: Pixel-Level Analysis

One such tool, DeepTrace, employs AI to scrutinize videos frame by frame. By analyzing pixel patterns, it can detect anomalies indicative of tampering. This detailed analysis helps identify videos that have been altered, even when the changes are minute and imperceptible to viewers.
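The article does not describe how DeepTrace works internally, so the following is only a generic sketch of frame-by-frame pixel analysis, not that tool's method. It assumes each frame is a small grayscale image stored as a list of rows of integer pixel values, measures how much each frame differs from the previous one, and flags transitions that are unusually large compared with the rest of the clip. The function names and the parameters `k` and `min_scale` are hypothetical.

```python
from statistics import median


def frame_diff(a, b):
    """Mean absolute per-pixel difference between two same-sized grayscale frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def flag_anomalous_frames(frames, k=5.0, min_scale=1.0):
    """Return indices of frames whose change from the previous frame is an outlier.

    A transition is flagged when it exceeds the median change by more than
    k times the median absolute deviation (floored at min_scale).
    """
    if len(frames) < 3:
        return []
    diffs = [frame_diff(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    med = median(diffs)
    mad = median(abs(d - med) for d in diffs)
    scale = max(mad, min_scale)
    return [i + 1 for i, d in enumerate(diffs) if (d - med) / scale > k]
```

Note that a single altered frame produces two flagged indices: the transition into the altered frame and the transition back out of it. Ordinary scene cuts would also be flagged, so a check like this can only point a reviewer at frames worth inspecting.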

UC Berkeley’s Facial Analysis Tool

Researchers at the University of California, Berkeley, have developed a tool that focuses on facial expressions and movements. This AI can detect when a person’s facial movements are unnatural, a common sign of deepfake manipulation. Such tools are essential for distinguishing genuine content from sophisticated forgeries.

Audio Deepfake Detection

Deepfakes are not limited to visuals; audio deepfakes are also a growing concern. AI tools are being created to identify manipulated audio recordings, which are often used in scams and fraudulent activities.

Microsoft’s Video Authenticator

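Microsoft’s Video Authenticator is a prominent example of automated detection, although it works on images and video rather than audio. It analyzes a still photo or video and reports a confidence score that the media has been artificially manipulated, looking for blending boundaries and subtle fading or grayscale elements that the human eye might miss. Audio-based scams, where a fake voice is used to deceive individuals, call for comparable tools that examine the speech signal itself.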

Verifying Media Authenticity

Beyond detection, some AI tools are designed to verify the origin and authenticity of media content, ensuring its credibility from the source.

Truepic: Authenticity Verification

Truepic’s platform leverages AI to authenticate photos and videos. When content is captured using Truepic’s app, the AI analyzes and embeds metadata to confirm its genuineness. This makes it significantly harder for fake media to be passed off as real, providing a robust solution for maintaining media integrity.
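The general idea of binding metadata to content at capture time can be sketched as follows. This is not Truepic’s implementation, which the article does not detail. It is a simplified stand-in that uses only Python’s standard library: the media bytes are hashed, the hash and metadata are signed with a secret key using HMAC, and a later check confirms that neither has changed. Production provenance systems typically use public-key signatures instead of a shared secret; the function names, the key, and the metadata fields here are all hypothetical.

```python
import hashlib
import hmac
import json


def seal_capture(media_bytes, metadata, key):
    """Bind metadata to the media content and sign both with a secret key."""
    record = {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "metadata": metadata,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"record": record, "signature": signature}


def verify_capture(media_bytes, sealed, key):
    """Return True only if the record is untampered and matches the media bytes."""
    payload = json.dumps(sealed["record"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, sealed["signature"]):
        return False  # metadata or hash was altered after sealing
    actual_hash = hashlib.sha256(media_bytes).hexdigest()
    return hmac.compare_digest(actual_hash, sealed["record"]["sha256"])


# Example usage with made-up values.
key = b"demo-secret-key"
photo = b"raw image bytes"
sealed = seal_capture(photo, {"device": "hypothetical-cam"}, key)
print(verify_capture(photo, sealed, key))           # original content
print(verify_capture(photo + b"edit", sealed, key))  # modified content
```

The design point is that verification depends on the content itself: changing a single byte of the media or any metadata field invalidates the seal, so an edited file cannot reuse the original’s proof of authenticity.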

The Importance of Media Integrity

Maintaining the integrity of media is crucial in today’s digital age, where misinformation can spread rapidly. AI tools that detect deepfakes and verify authentic content are essential for preserving trust in the media we consume. By identifying and exposing fake content, these technologies help ensure that we can rely on digital information for accurate and truthful communication.

Conclusion

The fight against deepfakes is ongoing, but with the development of advanced AI tools, we are better equipped to detect and prevent the spread of fake media. These innovations are vital for maintaining media integrity, ensuring that what we see and hear remains trustworthy in an increasingly digital world. As technology continues to evolve, so too will our ability to protect the authenticity of our media, fostering a more informed and truthful society.